Intro to Grad-CAM - Visualizing CNNs

Grad-CAM (Gradient-weighted Class Activation Mapping) is a generalization of CAM that applies to a significantly broader range of CNN model families.

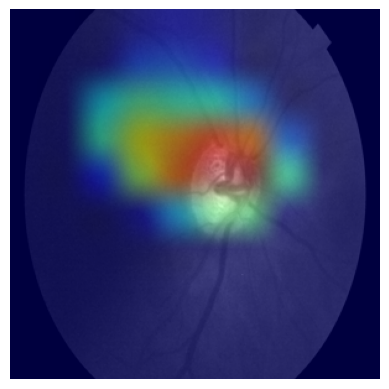

The intuition is that the last convolutional layers offer the best compromise between high-level semantics and detailed spatial information, the latter being lost in fully-connected layers. The neurons in these layers look for semantic, class-specific information in the image.

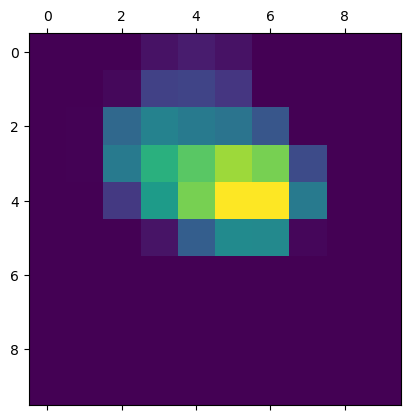

$$L_{\text{Grad-CAM}}^c = \mathrm{ReLU}\left(\sum_k \alpha_k^c A^k\right)$$

where $$\alpha_k^c = \frac{1}{Z}\sum_i\sum_j\frac{\partial{y_c}}{\partial{A_{ij}^k}}$$

$L_{\text{Grad-CAM}}^c$ is the class-discriminative localization map for any class $c$.

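Here $y_c$ is the score for class $c$ (before the softmax) and $A^k$ is the $k$-th feature map of the last convolutional layer. The weight $\alpha_k^c$ represents a partial linearization of the deep network downstream from $A$ and captures the ‘importance’ of feature map $k$ for the target class $c$. The term $\frac{1}{Z}\sum_i\sum_j$ is global average pooling over the spatial positions $(i, j)$ of the feature map, where $Z$ is the number of such positions.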
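To make the global-average-pooling step concrete, here is a minimal NumPy sketch that computes $\alpha_k^c$ from a gradient array. It assumes the gradients $\partial y_c / \partial A_{ij}^k$ have already been obtained (for example from an autodiff framework) and are stored channels-last with shape `(H, W, K)`; the function name `grad_cam_weights` is hypothetical.

```python
import numpy as np

def grad_cam_weights(grads: np.ndarray) -> np.ndarray:
    """Global-average-pool the gradients dy_c/dA^k_ij over the spatial axes.

    grads: array of shape (H, W, K), channels-last (an assumption), holding
           the gradient of the class score y_c w.r.t. each feature map A^k.
    Returns alpha of shape (K,), one importance weight per feature map.
    """
    h, w, _ = grads.shape
    z = h * w  # Z = number of spatial positions (i, j) in each feature map
    return grads.sum(axis=(0, 1)) / z

# Toy example: gradients for two 2x2 feature maps (K = 2)
grads = np.zeros((2, 2, 2))
grads[..., 0] = [[1.0, 3.0], [0.0, 0.0]]    # sums to 4, so alpha_0 = 4 / 4 = 1.0
grads[..., 1] = [[-2.0, 0.0], [0.0, -2.0]]  # sums to -4, so alpha_1 = -4 / 4 = -1.0
alpha = grad_cam_weights(grads)
```

A feature map whose gradients are mostly positive gets a positive weight; one whose gradients push the class score down gets a negative weight.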

We apply a ReLU to the linear combination of maps because we are only interested in features that have a positive influence on the class of interest, i.e. pixels whose intensity should be increased in order to increase $y_c$. Negative values are likely to belong to other categories in the image.

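The expression inside the ReLU follows the same principle as CAM: the feature maps of the last convolutional layer are combined in a weighted linear sum to obtain the activation map. The difference is that CAM takes the weights from a fully-connected layer placed after global average pooling, whereas Grad-CAM derives them from gradients, so the network does not need a specific architecture or retraining.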
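The weighted sum and the ReLU can be sketched in a few lines of NumPy. This is a minimal illustration, assuming channels-last feature maps of shape `(H, W, K)` and weights $\alpha^c$ of shape `(K,)`; `grad_cam_map` is a hypothetical helper, not part of any library.

```python
import numpy as np

def grad_cam_map(activations: np.ndarray, alpha: np.ndarray) -> np.ndarray:
    """Compute L^c = ReLU(sum_k alpha_k^c * A^k).

    activations: feature maps A of shape (H, W, K), channels-last (assumed).
    alpha: per-map weights of shape (K,).
    Returns an (H, W) coarse localization map.
    """
    # Contract the channel axis: sum over k of alpha_k * A^k
    weighted_sum = np.tensordot(activations, alpha, axes=([2], [0]))
    # Keep only evidence that increases the class score
    return np.maximum(weighted_sum, 0.0)

# Toy example: two 2x2 feature maps, the second one with a negative weight
A = np.zeros((2, 2, 2))
A[..., 0] = [[1.0, 0.0], [2.0, 0.0]]
A[..., 1] = [[0.0, 3.0], [1.0, 0.0]]
alpha = np.array([1.0, -1.0])
cam = grad_cam_map(A, alpha)
```

Before the ReLU the weighted sum is `[[1, -3], [1, 0]]`; the position where the negatively weighted map dominates is clipped to zero, leaving `[[1, 0], [1, 0]]`.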

The code below uses the gradients of the retinal target class flowing into the final convolutional layer to produce a coarse localization map that highlights the regions of the image most important for predicting that class.

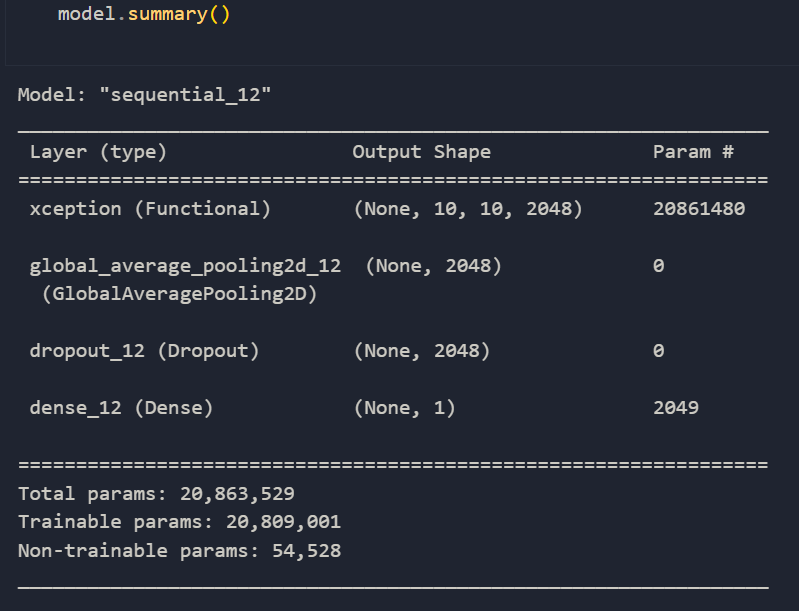
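The resulting map has the spatial resolution of the last convolutional layer, which is much coarser than the input image. A common post-processing step, assumed here rather than confirmed by the source, is to upsample the map bilinearly to the image size and scale it to [0, 1] before overlaying it as a heatmap. The NumPy-only sketch below uses hypothetical helpers `upsample_bilinear` and `normalize`; in practice a library resize function would typically replace the hand-written interpolation.

```python
import numpy as np

def upsample_bilinear(cam: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Bilinearly resize an (h, w) map to (out_h, out_w), corners aligned."""
    h, w = cam.shape
    ys = np.linspace(0, h - 1, out_h)  # source row coordinate of each output row
    xs = np.linspace(0, w - 1, out_w)  # source column coordinate of each output column
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, h - 1)
    x1 = np.minimum(x0 + 1, w - 1)
    wy = (ys - y0)[:, None]  # vertical interpolation weights, shape (out_h, 1)
    wx = (xs - x0)[None, :]  # horizontal interpolation weights, shape (1, out_w)
    top = cam[y0][:, x0] * (1 - wx) + cam[y0][:, x1] * wx
    bottom = cam[y1][:, x0] * (1 - wx) + cam[y1][:, x1] * wx
    return top * (1 - wy) + bottom * wy

def normalize(heatmap: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Scale a non-negative (post-ReLU) map to [0, 1]; eps avoids division by zero."""
    return heatmap / (heatmap.max() + eps)

# Usage: enlarge a toy 2x2 Grad-CAM map to 3x3 and normalize it
coarse = np.array([[0.0, 2.0], [4.0, 6.0]])
up = upsample_bilinear(coarse, 3, 3)
heat = normalize(up)
```

Dividing by the maximum (rather than min-max scaling) keeps zero-valued regions at zero, which matches the ReLU's meaning of "no positive evidence".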

The model used in this approach has the following structure:

```python
# Retrieve the Xception backbone nested inside the full Keras model
base_model = model.get_layer('xception')
```


https://janofsun.github.io/2023/12/18/Intro-to-Grad-CAM-CNN的可视化/