LangChain Callback Deep Dive - Why Standard Tools Trigger Nested Run Events

I’ve recently been developing a multi-agent chatbot featuring a reasoning agent and a conversational agent, each equipped with one or more tools. Notably, the reasoning agent utilizes a ReAct prompt, which can be readily constructed using LangChain. One of the involved tools is a retriever, and the retrieval process can become time-consuming with a large database and a significant number of results to fetch. To address this latency, I’ve decided to stream the reasoning process to the user interface. LangChain conveniently supports this through its CallbackHandler functionality.
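As a rough illustration of the streaming idea, here is a minimal sketch of a callback handler that turns events into tagged text lines on a queue. The class name `QueueStreamHandler`, the queue-based design, and the message wording are my assumptions for illustration and not the handler used in this project; the method names and keyword arguments follow LangChain's `BaseCallbackHandler` interface. The import fallback is only there so the sketch also runs without LangChain installed.

```python
import queue
from typing import Any, List, Optional
from uuid import UUID, uuid4

try:
    from langchain_core.callbacks import BaseCallbackHandler
except ImportError:  # assumption: fallback so the sketch runs without LangChain
    BaseCallbackHandler = object


class QueueStreamHandler(BaseCallbackHandler):
    """Hypothetical handler: pushes one text line per callback event onto a queue."""

    def __init__(self) -> None:
        self.events: "queue.Queue[str]" = queue.Queue()

    def _emit(self, tags: Optional[List[str]], message: str) -> None:
        # Prefix every line with the run's tags, e.g. ['conversation'].
        self.events.put(f"{tags or []} {message}")

    def on_chain_start(self, serialized: Any, inputs: Any, *, run_id: UUID,
                       parent_run_id: Optional[UUID] = None,
                       tags: Optional[List[str]] = None, **kwargs: Any) -> None:
        self._emit(tags, f"Chain starts: {inputs}")

    def on_llm_start(self, serialized: Any, prompts: List[str], *, run_id: UUID,
                     parent_run_id: Optional[UUID] = None,
                     tags: Optional[List[str]] = None, **kwargs: Any) -> None:
        self._emit(tags, "The LLM is starting ...")

    def on_llm_end(self, response: Any, *, run_id: UUID,
                   parent_run_id: Optional[UUID] = None,
                   tags: Optional[List[str]] = None, **kwargs: Any) -> None:
        self._emit(tags, "The LLM ends...")

    def on_tool_start(self, serialized: Any, input_str: str, *, run_id: UUID,
                      parent_run_id: Optional[UUID] = None,
                      tags: Optional[List[str]] = None, **kwargs: Any) -> None:
        self._emit(tags, f"Tool starts: {input_str}")

    def on_tool_end(self, output: Any, *, run_id: UUID,
                    parent_run_id: Optional[UUID] = None,
                    tags: Optional[List[str]] = None, **kwargs: Any) -> None:
        self._emit(tags, "Tool ends")
```

With LangChain installed, a handler like this would typically be attached through the run config, for example `config={"callbacks": [handler]}`, and a web endpoint could drain `handler.events` to forward each line to the UI.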

The following is the initial streamed reasoning content:

['conversation'] Chain starts: Could you provide more details?

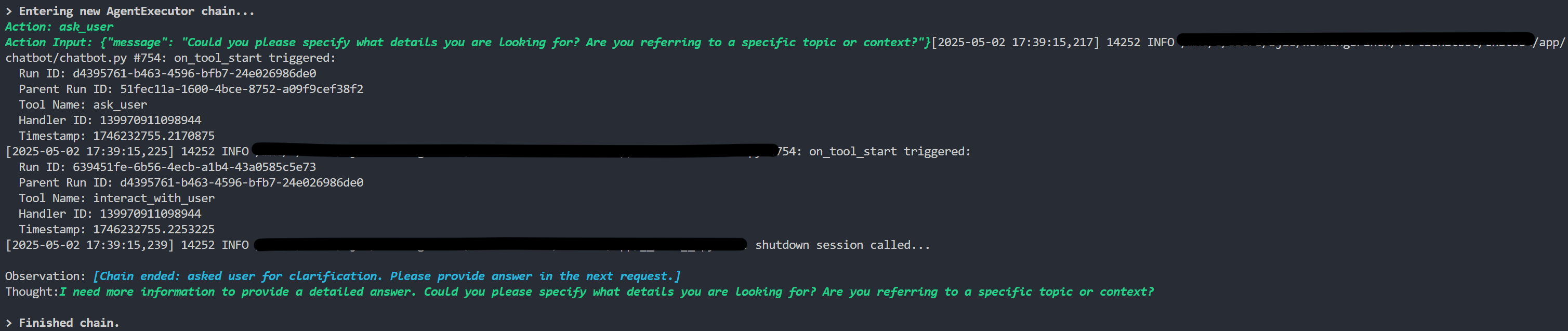

The repeated ‘Tool starts/ends’ messages were initially quite confusing. I suspected a tool-calling error might be causing the LLM to retry, but this behavior persisted. Interestingly, this issue didn’t occur with the ReAct agent.

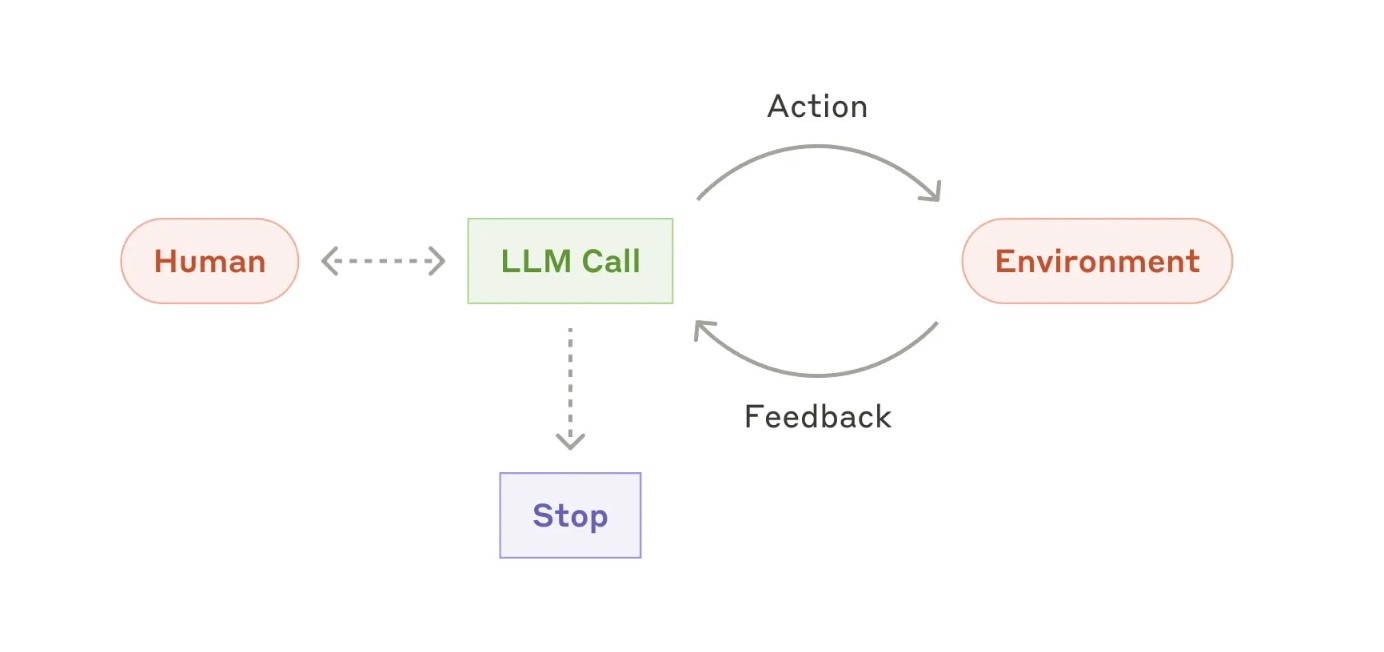

As this log clearly shows, LangChain’s tracing and callback system treats Tool execution as a two-level process:

- The Tool Run represents the call to the Tool object (like “ask_user”).

- The Function Run, a child of the Tool Run, represents the execution of the underlying Python function (like “interact_with_user”).
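The parent/child relationship described above can be made visible from inside a handler, because every callback receives a `run_id` and a `parent_run_id`. The following is a minimal sketch under that assumption: `RunTreeRecorder` is a hypothetical name, and the indentation scheme is mine. It records each tool run's parent and indents nested tool runs by their depth. The import fallback only exists so the sketch runs without LangChain installed.

```python
from typing import Any, Dict, List, Optional
from uuid import UUID, uuid4

try:
    from langchain_core.callbacks import BaseCallbackHandler
except ImportError:  # assumption: fallback so the sketch runs without LangChain
    BaseCallbackHandler = object


class RunTreeRecorder(BaseCallbackHandler):
    """Hypothetical handler: logs tool runs indented by their nesting depth."""

    def __init__(self) -> None:
        self.parents: Dict[UUID, Optional[UUID]] = {}  # tool run_id -> parent_run_id
        self.lines: List[str] = []

    def _depth(self, run_id: UUID) -> int:
        # Count how many recorded tool runs sit above this run.
        depth = 0
        parent = self.parents.get(run_id)
        while parent is not None and parent in self.parents:
            depth += 1
            parent = self.parents.get(parent)
        return depth

    def on_tool_start(self, serialized: Any, input_str: str, *, run_id: UUID,
                      parent_run_id: Optional[UUID] = None, **kwargs: Any) -> None:
        self.parents[run_id] = parent_run_id
        # The run name may arrive as a keyword argument or inside `serialized`.
        name = kwargs.get("name") or (serialized or {}).get("name", "<unnamed>")
        self.lines.append("  " * self._depth(run_id) + f"Tool starts: {name}")
```

Fed with a Tool Run named "ask_user" and a child run named "interact_with_user", the second line is indented one level, which mirrors the two-level structure in the trace.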

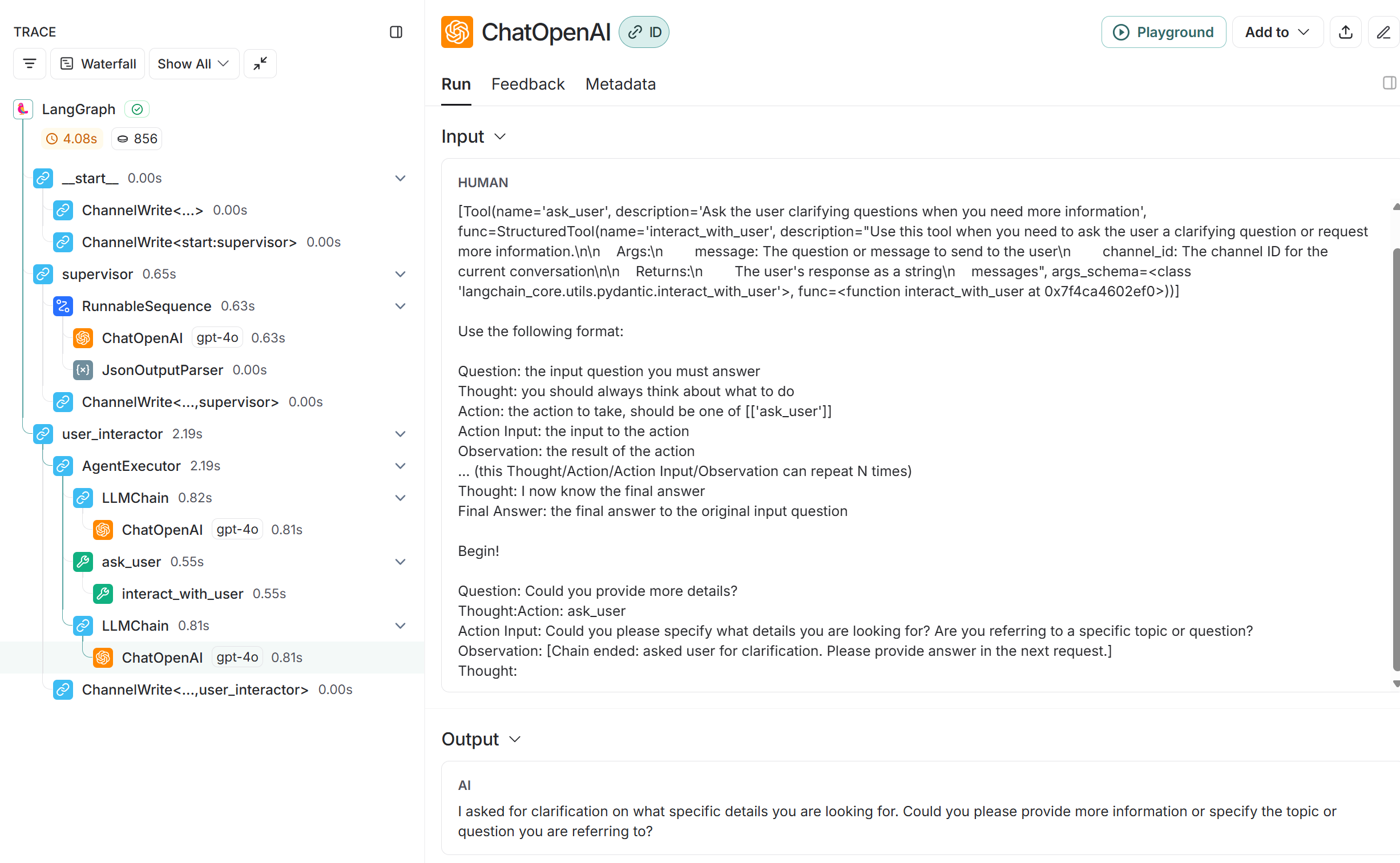

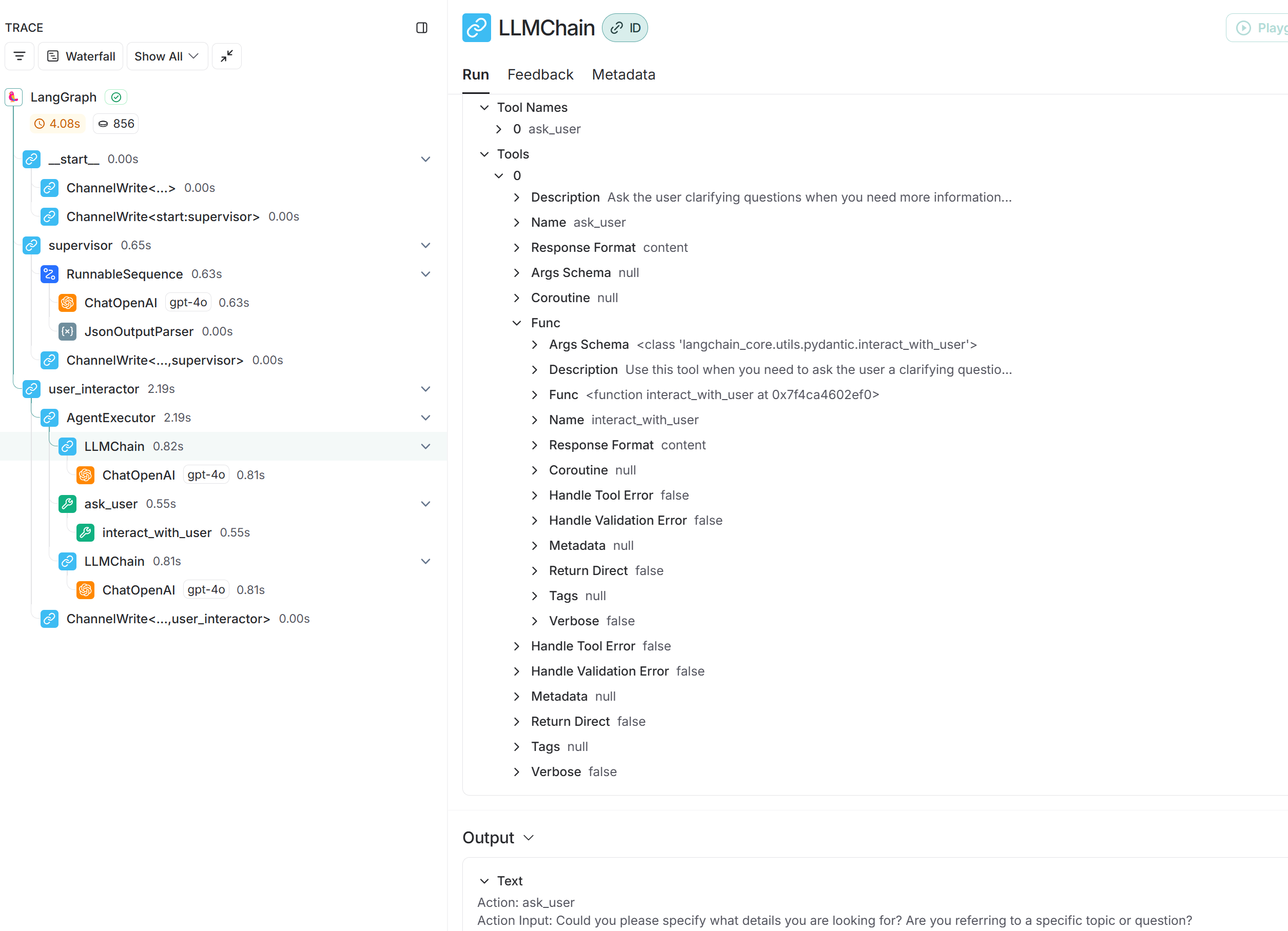

Consequently, I decided to use LangSmith to gain better observability into the agent’s workflow.

Let’s go through this process step by step.

['conversation'] Chain starts: Could you provide more details?

- LangSmith Trace: This corresponds to the very beginning of the overall LangGraph execution, when it receives the initial input. The SSECallbackHandler’s on_chain_start likely logs the input for the root run.

['conversation'] Chain starts:

- LangSmith Trace: This maps to the start of the AgentExecutor run (duration 2.19s) inside the user_interactor node. The AgentExecutor itself is a type of Chain, and since it was invoked with the conversation tag (via the node’s function), the handler logs its start.

['conversation'] The LLM is starting …

- LangSmith Trace: This maps to the start of the first ChatOpenAI call (duration 0.81s). This call is nested within the first LLMChain (duration 0.82s), which is inside the AgentExecutor. This is the agent figuring out what action to take.

['conversation'] The LLM ends…

- LangSmith Trace: Maps to the end of that first ChatOpenAI call.

['conversation'] Action/Action Input:

- LangSmith Trace: These lines are the output generated by the first ChatOpenAI / LLMChain. The Action/Action Input text possibly appears in the stream because ‘verbose=True’ is set on the AgentExecutor.

['conversation'] Chain starts: (The 3rd)

- LangSmith Trace: This maps to the start of the second LLMChain run (duration 0.81s) within the AgentExecutor. This chain likely takes the observation returned by the ask_user tool and decides on the final response for this agent step.

['conversation'] The LLM is starting … (The 2nd)

- LangSmith Trace: Maps to the start of the second ChatOpenAI call (duration 0.81s), which is nested inside the second LLMChain. This is the LLM call that generates the final answer text based on the tool’s result. This is the step highlighted in the LangSmith screenshot.

['conversation'] The LLM ends… (The 2nd)

- LangSmith Trace: Maps to the end of the second ChatOpenAI call.

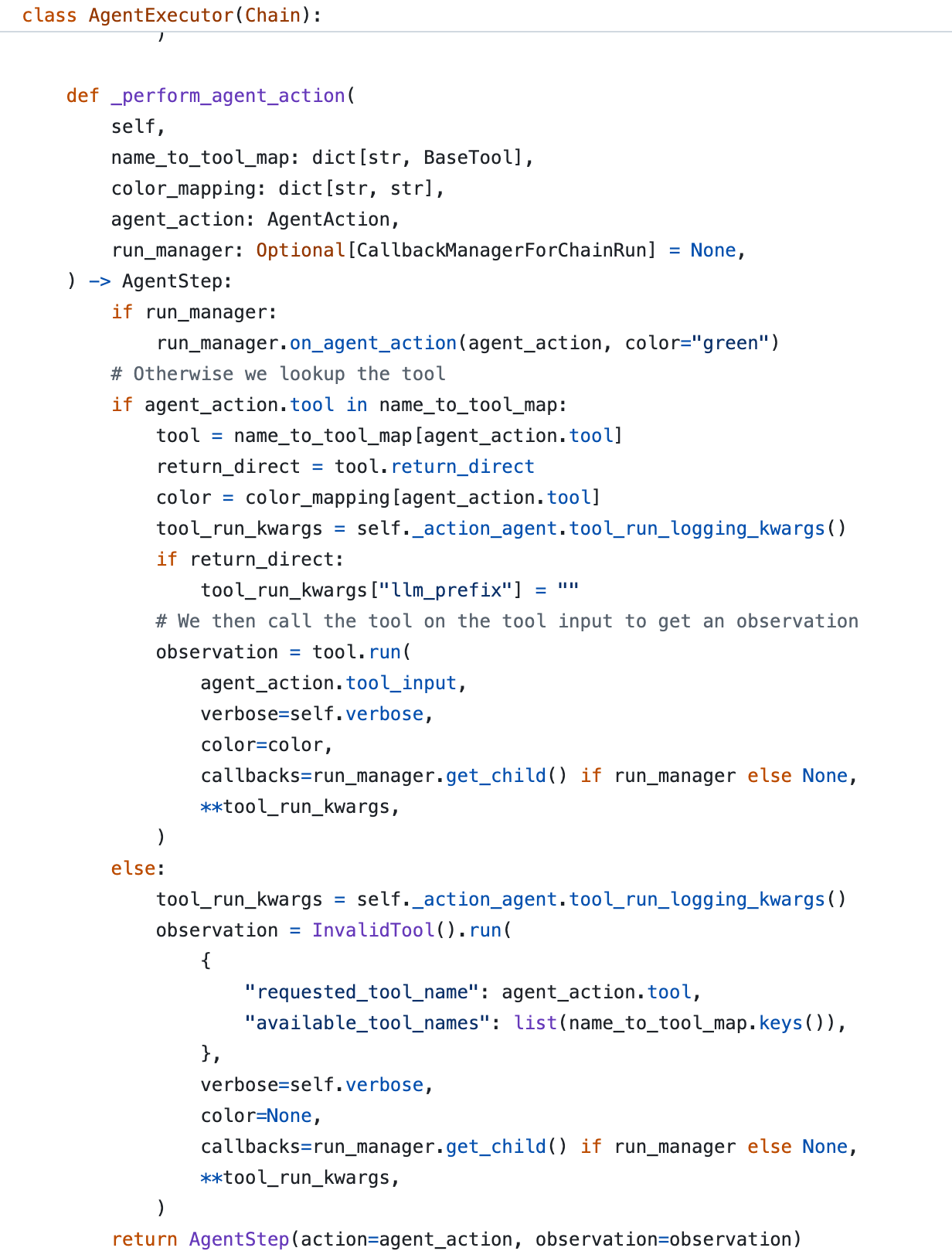

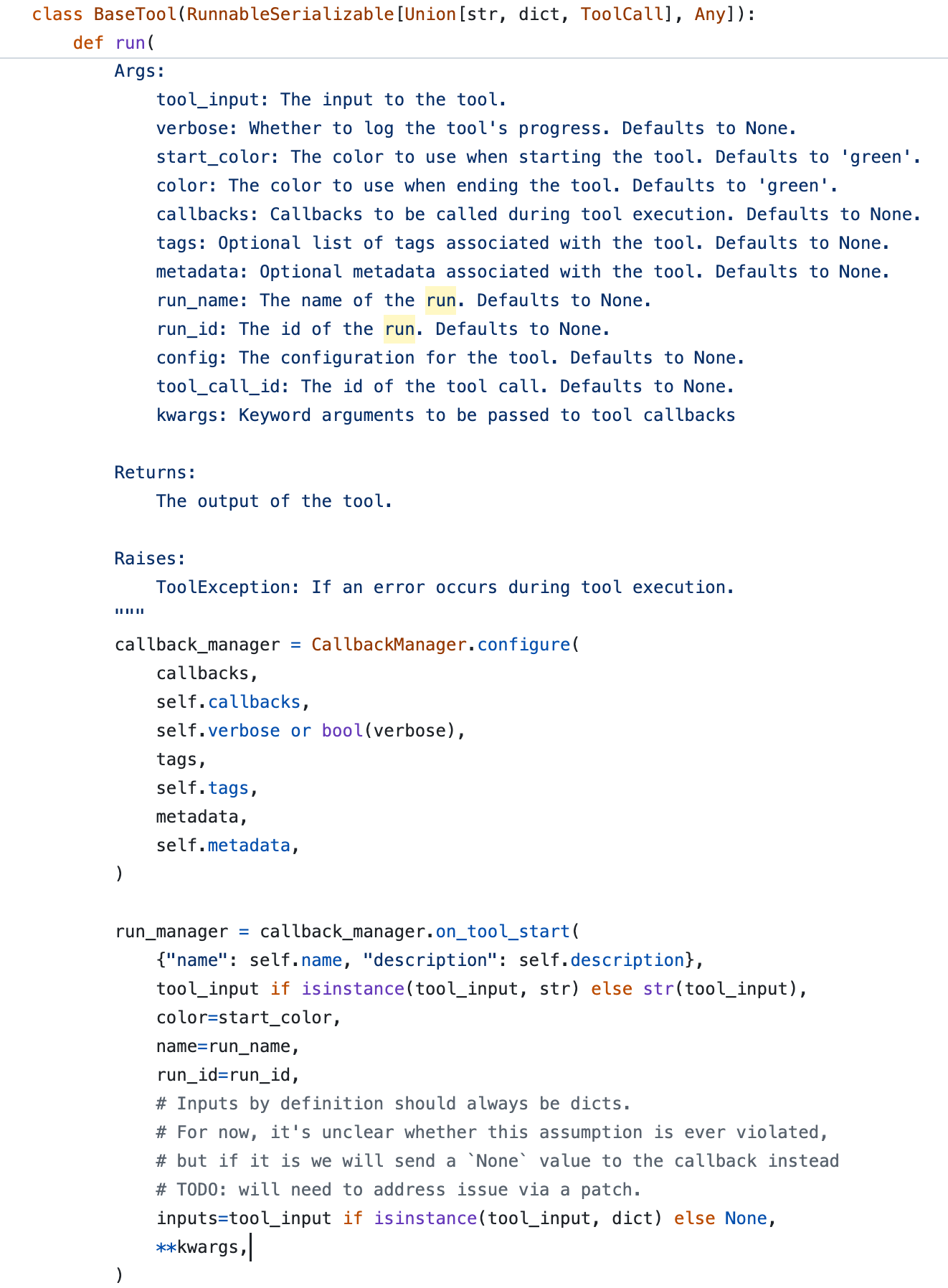

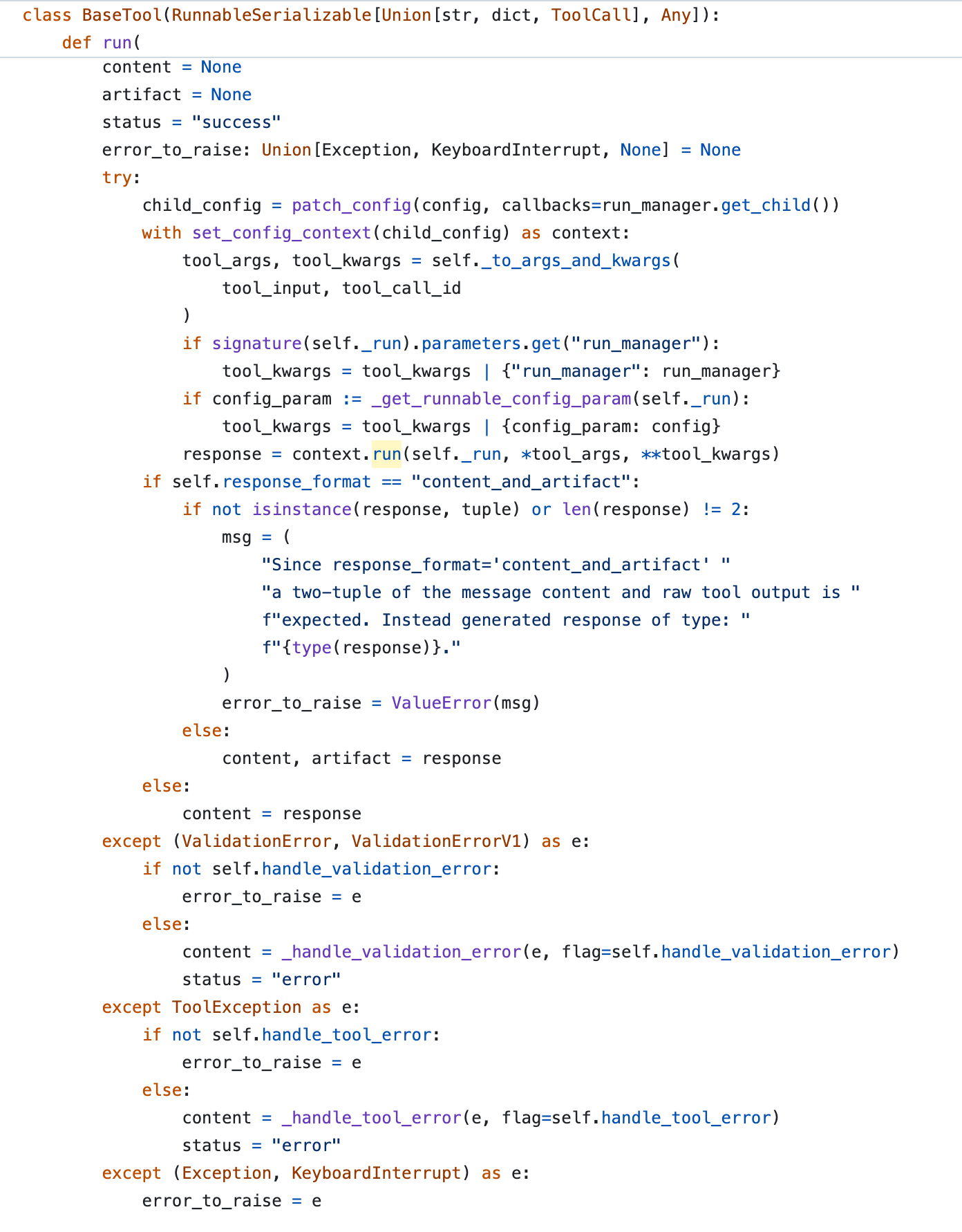

The LangChain source code shows where the two events come from. The call path is _perform_agent_action -> tool.run -> callback_manager.on_tool_start:

First on_tool_start (Tool Run):

- Trigger: Explicitly called by callback_manager.on_tool_start(…) directly within the BaseTool.run method.

- Represents: The invocation of the Tool object itself (e.g., name="ask_user").

- Hierarchy: Parent run (child of the agent run).

Second on_tool_start (Function Run):

- Trigger: Implicitly triggered by the Runnable execution framework when context.run(self._run, …) is called, using the child callback manager obtained via run_manager.get_child().

- Represents: The execution of the underlying Python function logic within self._run (e.g., identified as name="interact_with_user").

- Hierarchy: Child of the first Tool Run.
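Given that the second on_tool_start is a child of the first, one way to keep duplicate "Tool starts/ends" lines out of the stream is to skip any tool run whose parent is itself a tool run. The sketch below is one possible approach and not something taken from LangChain: `OuterToolOnlyHandler` and its bookkeeping sets are hypothetical names, while the callback signatures follow the `BaseCallbackHandler` interface. The import fallback only exists so the sketch runs without LangChain installed.

```python
from typing import Any, List, Optional, Set
from uuid import UUID, uuid4

try:
    from langchain_core.callbacks import BaseCallbackHandler
except ImportError:  # assumption: fallback so the sketch runs without LangChain
    BaseCallbackHandler = object


class OuterToolOnlyHandler(BaseCallbackHandler):
    """Hypothetical handler: reports only the outermost tool run."""

    def __init__(self) -> None:
        self.active_tool_runs: Set[UUID] = set()  # outer tool runs in progress
        self.nested_runs: Set[UUID] = set()       # tool runs started inside a tool run
        self.messages: List[str] = []

    def on_tool_start(self, serialized: Any, input_str: str, *, run_id: UUID,
                      parent_run_id: Optional[UUID] = None, **kwargs: Any) -> None:
        if parent_run_id in self.active_tool_runs or parent_run_id in self.nested_runs:
            # Child of another tool run: remember it so its end is skipped too.
            self.nested_runs.add(run_id)
            return
        self.active_tool_runs.add(run_id)
        name = kwargs.get("name") or (serialized or {}).get("name", "<unnamed>")
        self.messages.append(f"Tool starts: {name}")

    def _finish(self, run_id: UUID, message: str) -> None:
        if run_id in self.nested_runs:
            self.nested_runs.discard(run_id)
            return
        self.active_tool_runs.discard(run_id)
        self.messages.append(message)

    def on_tool_end(self, output: Any, *, run_id: UUID,
                    parent_run_id: Optional[UUID] = None, **kwargs: Any) -> None:
        self._finish(run_id, "Tool ends")

    def on_tool_error(self, error: BaseException, *, run_id: UUID,
                      parent_run_id: Optional[UUID] = None, **kwargs: Any) -> None:
        self._finish(run_id, f"Tool failed: {error}")
```

The filter keys on run IDs instead of tool names, so it should keep working if the wrapped function is renamed. The trade-off is that the inner function's name (such as "interact_with_user") never reaches the UI.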

The involved tools are like the following:

forti_retriever = FortiRetriever()

It looks like for Tools that just wrap a normal Python function, the framework tracks two layers: the tool call itself, plus the function’s execution.

But for Tools made using helpers (like create_retriever_tool) that wrap special LangChain objects (like a BaseRetriever), it might only track one layer: just the main tool call. The internal stuff (like the retriever getting documents) is treated as part of that single tool run, instead of getting its own separate child run triggering on_tool_start.
